I graduated from the U.S. Air Force’s Aircraft Mishap Investigation Course in 1985, and although I’ve only written two official accident reports, the course changed the way I fly airplanes and evaluate such documents. If you have anything to do with the ownership, management, scheduling or any other aspect of operating airplanes, the words “the organization failed” should concern you. That word, “failed,” doesn’t seem to be used as often as it deserves.

The most important lesson in an aircraft accident investigation is that you’ve not gotten to the bottom of things until you can point to someone involved who failed. Spoiler alert: That someone isn’t always the pilot. In fact, it rarely is.

In today’s politically correct society, finger-pointing is discouraged. We want to find out what is wrong but not who is wrong. If you are in the business of making everyone happy, that might be the right approach. But if you are in the business of accident prevention, who is wrong is precisely what needs to be determined. And you will almost always have to cast your aim higher than at the two people sitting in Row One of an airplane. Sure, the pilots have a great deal to do with every aircraft accident. But as Capt. Warren Vanderburgh, author of the original American Airlines series about automation dependency, says, “We made you that way.” And the “we” to whom he refers is the organization.

The Airline Failed

Vanderburgh was a U.S. Air Force pilot for 27 years and followed that with 32 years at American where he earned rock star status at the company’s Flight Academy. I think his reputation as an exceptional instructor pilot was well deserved and his teaching on automation dependency has changed the way many of us approach cockpit automation. As he said, we are “children of the magenta.” He describes several examples of pilots in his simulator failing to drop down levels of automation to prevent disaster. After each story he says, “I am so sorry, I did not mean to make you like this.”

But he is also credited with another course called the Advanced Aircraft Maneuvering Program, which can be summed up by saying: Fly the airplane; don’t let the airplane fly you. The program received some criticism from NASA, Boeing, Airbus and the FAA for the use of rudder in correcting bank angles. This might work on smaller aircraft but can lead to disastrous results in a large, transport category airplane. Some who attended the course deny that the use of rudder was encouraged, but others complained that it was.

I spoke with a member of the NTSB investigating the accident that follows, who says the use of rudder was indeed sanctioned by the course. It is apparent that at least one pilot took away the idea that the aggressive use of rudder was acceptable. The result was the crash of American Airlines Flight 587, an Airbus A300, on Nov. 12, 2001. While departing New York’s JFK International (KJFK), the airplane twice flew through the wake turbulence of a Boeing 747 and then crashed, killing all 260 passengers and crew and five persons on the ground.

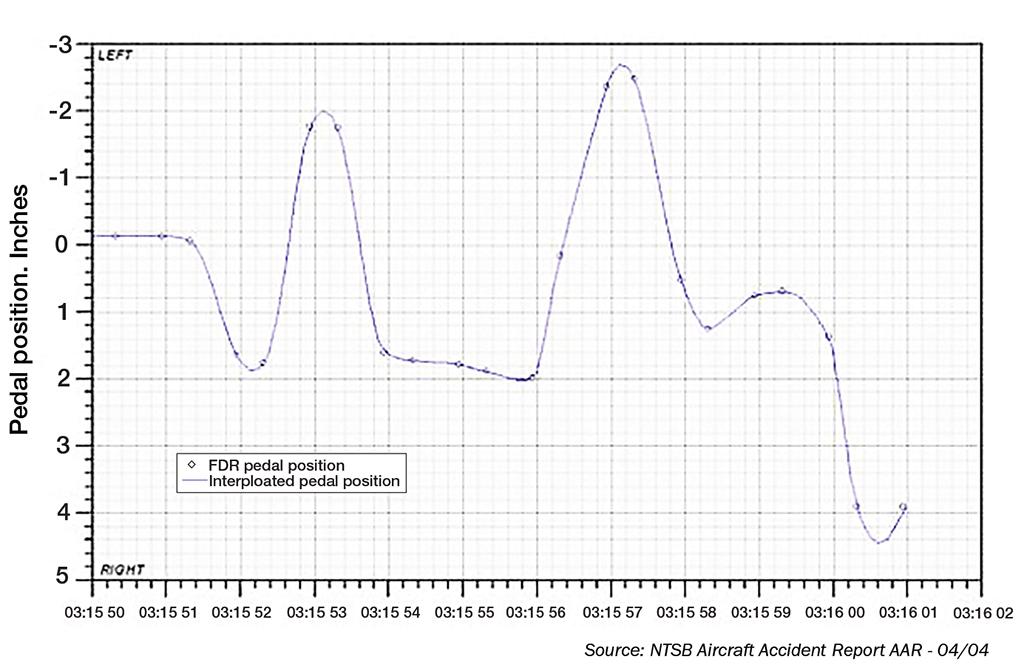

The first encounter was at low speed and the first officer’s (F/O) control inputs appeared to be perfectly normal. The second encounter was at 250 kt., the maximum permitted below 10,000 ft., and the F/O’s rudder inputs were larger and included several reversals. He didn’t understand that his aircraft’s full rudder deflection at that speed could be reached with as little as 1.25 in. of rudder pedal movement and 10 lb. of pressure. He also didn’t understand the dangers of rapid rudder reversal.

Airbus, for its part, said the ailerons and spoilers were effective for roll control down to stall speed and to use rudder as necessary to avoid sideslip but not as a primary source of roll control. But looking for someone to blame, many singled out the advanced aircraft maneuvering course. I think Vanderburgh’s reputation with the company sank as a result, and that is unfortunate since many of his lessons remain valid to this day, especially the one that says, “We made you that way.”

The NTSB determined that the probable cause of the accident “was the inflight separation of the vertical stabilizer as a result of the loads beyond ultimate design that were created by the first officer’s unnecessary and excessive rudder pedal inputs.” They found as contributing factors the Airbus A300-600 rudder system design and elements of the airline’s Advanced Aircraft Maneuvering Program. If I were writing the accident report, I would say the cause was that the airline failed to ensure all of its pilots were properly trained in upset recovery. That may seem unfair in that most American Airlines pilots seemed to learn the correct lessons imparted by the company’s highly robust simulator upset recovery program. But at least one pilot never got the message. That is a common theme among very large organizations.

The Air Force Failed

I spent a few years flying an Air Force aircraft that seemed ancient at the time but is still flying 40 years after I first took to the skies with it. The KC-135A Stratotanker was a forerunner of the venerable Boeing 707, though it began its life with the designation of Boeing 717. The aircraft became operational in 1956 and over the years included several variants; in all, 732 were built.

By the time I got into the airplane in 1980, 56 of them had been destroyed through crashes and other events the Air Force likes to call “mishaps.” That’s a loss rate of 2.24 aircraft per year. That rate held up through about 1990. Since that year, six have been lost, slicing the rate to one airplane every five years. The difference has mostly been attributable to technology: better engines, better avionics and adoption of many of the things the rest of the Boeing 707 world had taken for granted. For example, consider the humble yaw damper.

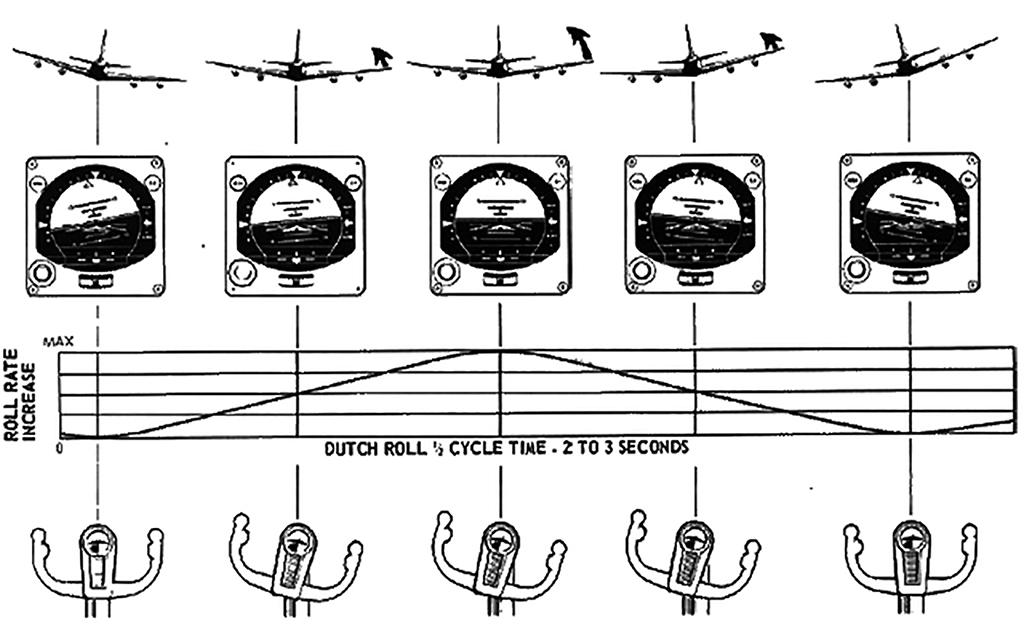

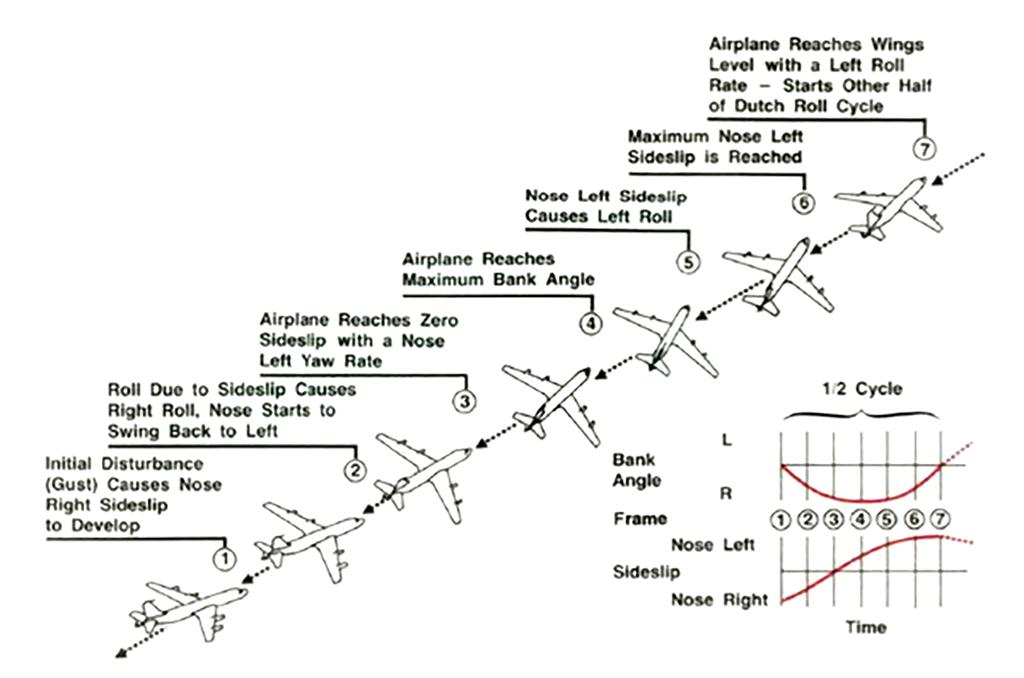

Thanks to aircraft with either benign Dutch roll tendencies or automatic yaw dampers, nowadays the subject of Dutch rolls tends to be an academic one. But not too long ago, dealing with it was a primary pilot skill for some airplanes. My favorite book on the topic is Handling the Big Jets by D.P. Davies. He writes, “Negative stability is potentially dangerous because sooner or later, depending on the rate of divergence, the aeroplane will either get out of hand or demand a constant very high level of skill and attention to maintain control.” His technique to get out of a Dutch roll is sound, but not so easy in practice:

“The control of a divergent Dutch roll is not difficult so long as it is handled properly. Let us assume that your aeroplane develops a diverging Dutch roll. The first thing to do is nothing — repeat nothing. Too many pilots have grabbed the aeroplane in a rush, done the wrong thing and made matters a lot worse. Don’t worry about a few seconds delay because it won’t get much worse in this time. Just watch the rolling motion and get the pattern fixed in your mind. Then, when you are good and ready, give one firm but gentle correction on the aileron control against the upcoming wing. Don’t hold it on too long — just in and out — or you will spoil the effect. You have then, in one smooth controlled action, killed the biggest part of the roll. You will be left with a residual wriggle, which you can take out, still on ailerons alone, in your own time.”

Ask anyone who has many hours flying aircraft with this type of negative stability and you will hear that it is no big deal, just counter with opposite aileron that you immediately reverse and ensure each opposite movement is smaller than the previous. Watching it happen, it looks like a flick of a wrist magically stops the Dutch roll. Now watch someone without the necessary experience, and you will see a sickening roll and yaw that increases in intensity, sometimes with fatal results.

Commercial airlines recognized this tendency in the Boeing 707 and quickly adopted yaw dampers that solved everything. The Air Force, in its own style, decided its pilots could handle the problem with a little training. And that training required a lot of skill and nerve from instructors, since the training could not be duplicated in simulators and had to happen very low to the ground.

On Aug. 27, 1985, an instructor pilot was flying with a student crew on their second training sortie at Beale Air Force Base, California (KBAB). A very good instructor pilot would let the new copilot get very low to the ground before ordering a go-around to maximize the training. Any copilot’s second flight in the KC-135A was bound to be exciting, with large bank angles low to the ground. This instructor pilot was very good but let the copilot stray too far, and they ended up hitting the runway with their left outboard engine. The engine failed and the instructor took the airplane and executed a go-around. The instructor’s control inputs were timely and correct until turning on downwind, when he allowed the airplane’s nose to climb too high. The airplane stalled, banked sharply and dove to the ground. All seven on board were killed.

The Air Force mishap report cites as the first finding that the copilot “induced lateral instability while on short final” and that the instructor pilot “failed to adequately intervene.” Perhaps both those findings are true. But consider that the copilot had about 200 hr. total flying time and a year of experience. The instructor pilot had 2,700 hr. and was tasked with allowing the situation to push right up to the boundaries of safety without crossing the line to unsafe.

If I were writing the report on that accident, I would have noted that the Air Force failed to install commercially available yaw dampers on the KC-135A fleet, even though they had been available for at least 20 years. Instead, the service required new KC-135A pilots to learn difficult Dutch roll compensation techniques that would take most pilots considerable experience to master. And I’d further note that the Air Force required its KC-135A instructor pilots to allow unqualified pilots to fly the aircraft very near the margins of controlled flight in an attempt to teach those techniques.

To its credit, the Air Force came up with a very good course in asymmetric flight for KC-135A pilots and eventually installed very good yaw dampers. And that leads us to the second crash involving KC-135 yaw dampers.

In the late 1990s, the service upgraded the engines, avionics and much of the safety equipment of the KC-135A fleet, producing over 400 KC-135Rs. A full-time yaw damper made Dutch roll a thing of the past and Dutch roll techniques took on a much lower priority.

On May 3, 2013, a KC-135R departed Bishkek-Manas International Airport (UCFM) in Kyrgyzstan for a combat aerial refueling mission. A flight control malfunction during takeoff caused the aircraft’s nose to slowly drift from side to side, or “rudder hunt.” The crew did not properly diagnose the condition and the rudder hunt progressed into a Dutch roll. The crew initiated a turn to their on-course heading, using a small amount of rudder. The use of rudder increased the aircraft’s oscillatory instability. The Dutch roll very quickly increased to a point at which the aircraft’s tail section separated from the aircraft, which crashed, killing all three crewmembers.

The aircraft flight manual called for either turning off the yaw damper or rudder power to reduce the rudder hunt and prohibited the use of rudder in this situation. The Air Force investigation determined that the aircraft was still in a flyable condition when the pilot applied and varied left rudder pressure several times and then reversed pressure to the right. The pilot was found “causal” because of the inappropriate rudder inputs. If I were writing the accident report, I would say that the Air Force failed to train its pilots to adequately recognize the conditions of Dutch roll in an airplane prone to it, and how to handle the condition manually.

So far, I have pointed fingers and second-guessed the actions of an airline and of a very large military organization. Bureaucracies seem prone to these kinds of things. But so, too, are smaller organizations in which there is some distance between decision makers and those carrying out those decisions.

The Organization Failed

I have flown for a few organizations in which an executive assistant simply called a scheduler with a foreign destination in mind and assumed everything would be just as safe and routine as a hop to a domestic airport. In my experience, however, a word of caution from the captain was always heeded: “I can’t take you to your ski holiday in Switzerland without some simulator training first,” or “Your airplane is not allowed into London City because it isn’t approved for steep approaches,” or “I am not willing to fly to that valley airport at night or in the weather because they don’t have radar coverage.” In every example, the pilot’s word prevailed. But that isn’t always the case.

On Sept. 4, 1991, Gulfstream GII N204C, which was owned by E.I. du Pont de Nemours & Co. and leased by Conoco, crashed while attempting to land at Kota Kinabalu Airport (WBKK), Malaysia, on what should have been a routine fuel stop. It appears the pilots were uncertain of their position while descending on an instrument approach and executed a go-around in a lackadaisical manner. One pilot was recorded saying, “I don’t like what we got here, I’m climbing this sucker outta here.”

A cursory look at the events of that day reveals the pilots were undisciplined in their instrument procedures, and failed to plan their descent to properly execute the approach from the correct altitude and at the expected clearance limit. Their radio phraseology was poor and nonstandard. So, the accident resulted from the pilots erring multiple times. But that is just the tip of the iceberg.

The case of N204C is murky because the official accident report isn’t available, the first draft of the accident history was written by the company’s legal team, and the Flight Safety Foundation used that draft for a video covering controlled flight into terrain. Roger K. Parsons, a research scientist hired by Conoco before it was acquired by DuPont, lost his wife in the crash and has investigated the accident in great detail. He charges that mismanagement of aviation resources by senior DuPont officers and directors was the primary cause of the disaster.

Parsons points out that the inexperienced pilots were poor choices to assign on such a demanding trip. The accident aircraft was not equipped with a ground proximity warning system (GPWS) that was installed on the company’s newer Gulfstream GIV. Pilot training and aircraft equipment were cut to meet budget reduction objectives. Some pilots who voiced concern for diminishing safety standards were fired. One 30-year DuPont pilot wrote letters to the three directors of aviation between 1988 and 1991, to a DuPont chief counsel and to the company CEO, “warning these men that a fatal accident would certainly occur if DuPont continued to require poorly trained and ill-prepared pilots to fly demanding trips of questionable safety.”

I suspect stories like this can be found in many business aviation accidents, but not always due to a company’s willful desire to cut costs and quell dissent. It is just as likely to be a lack of oversight: assuming everything is OK because nothing bad has ever happened before. But how does a company CEO without an aviation background ensure oversight of an endeavor he or she is not qualified to judge?

How to Avoid Failure

The crash of Gulfstream GIV N121JM at Hanscom Field (KBED), Bedford, Massachusetts, on May 31, 2014, provided the industry with a wakeup call. The crew managed to get themselves into takeoff position without having verbalized a single checklist, ending up with the flight controls locked and the engines at less than full thrust. They then attempted to unlock their flight controls — which would have been impossible at the speed attempted — rather than abort. They ended up in a fiery crash, killing themselves and everyone aboard. And yet the crew was trained at the premier GIV training center and had received glowing comments in two safety management system (SMS) audits.

As NTSB member (and now Chairman) Robert L. Sumwalt observed: “Although the crewmembers may have become complacent, I have to believe the owners of this airplane expected the pilots to always operate in conformity with — or exceed — their training, aircraft manufacturer requirements and industry best practices. Yet, as evidence showed in this investigation, once seated in their cockpit, these crewmembers operated in a manner that was far, far from acceptable.”

In this case, the owner of the aircraft died at the hands of these complacent pilots. It appears he died thinking he had the best two pilots up front; the training and audits he paid for told him just that. There can be no doubt that these pilots failed to behave in the professional manner that was expected of them. But I also think we as an industry failed these pilots. In the words of Capt. Vanderburgh, “I am so sorry. I did not mean to make you like this.”

I think there is something we can do about this and I take as an example a former colleague of mine who was fired from his position as chief pilot for a startup company in Silicon Valley. Our flight department was stunned when he was hired away from us, as this particular pilot was sloppy in procedure and technique. One day he was our worst pilot, the next he was leading a new flight department carrying around a CEO we all recognized from the daily financial news. This particular CEO must have sensed something, because he invited a pilot from another company to ride along in the jump seat. Our former colleague was in his usual form, ignoring checklists and over-speeding flaps. The report was not flattering, and our former colleague was given a small severance and asked to leave.

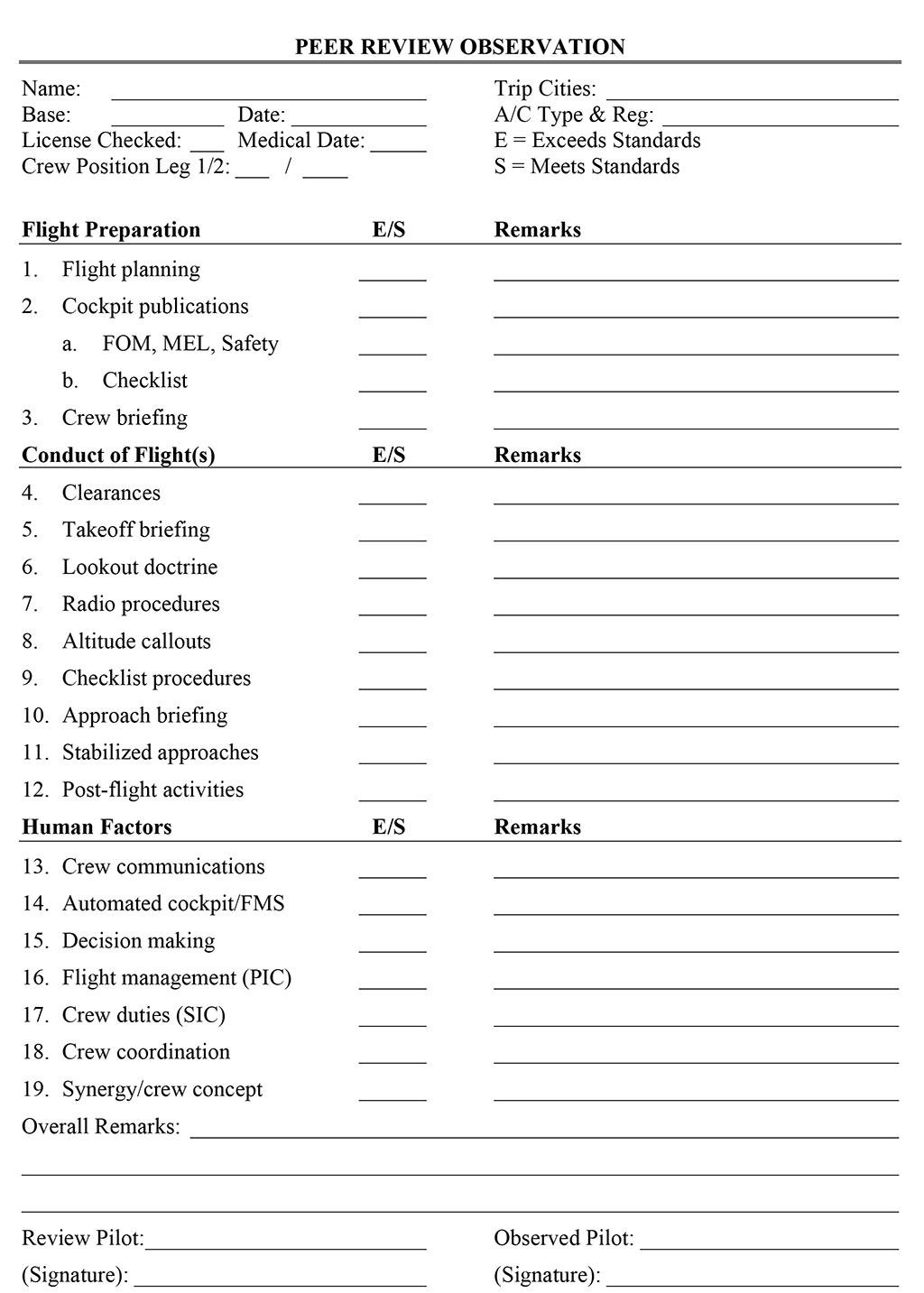

If it sounds like I am advocating that our CEOs and owners start suspecting those they have entrusted with their lives, I am not. I am saying that we pilots should keep an eye on each other and invite critique as often as we can get it. I like to call this a “peer review.”

In practice it goes like this: You invite someone from outside your flight department to join you on an operational trip. You ask them to ride along and observe. After the flight is over, you earnestly ask, “How did we do and how can we do better?” If you choose your peer carefully and if you are operating outside of industry best practices, the critique should provide a wakeup call. When you reciprocate, you have the responsibility of providing honest critique of your own. And if you notice your peer has the potential of ending up in an NTSB report, you will have more work to do.

Of course, inviting a jump seat pilot on a trip with your passengers will require a permission step you might be reluctant to take. But the peer review can be seen as the highest level of professionalism and can go a long way to reassure those in back that the two up front are worthy of trust. There are a few things to do first. Your company probably has a non-disclosure agreement (NDA) for your peer to sign. You might also want to consider a hold harmless agreement (HHA). Finally, a “Peer Review Observation Form” (see the accompanying sidebar) can be useful to guide your peer’s observations.

If you are a crewmember in a flight department, I encourage you to come up with your own peer review program. If you are the company officer responsible for the flight department, I encourage you to explore the idea; it is cheap insurance.

Chairman Sumwalt concluded his remarks about the Bedford GIV with, “You can fool the auditors, but never fool yourself.” I contend it is awfully hard to fool a peer who does the same thing you do for a living.

Comments

“For they stand together before the face of the sun even as the black thread and the white are woven together.

“And when the black thread breaks, the weaver shall look into the whole cloth, and he shall examine the loom also.”

Vanderburgh had it right. Much as we’d like a simple view, the world is rarely that accommodating. We are still in need of Vanderburgh’s lesson, and not just in aviation. Don’t forget the loom, especially in slam-dunk pilot error reports. You do so at your peril.